Getting Started with LangChain Agents

Agents with LangChain

In this post, we will get started with building agents using LangChain. For those who are new to it, LangChain is one of the most popular open-source libraries for building generative AI applications.

While the project itself is open source, it is backed by a commercial entity, Langchain.com.

Licensing

LangChain is available under the MIT License.

LangChain Ecosystem

LangChain is composed of several open-source projects, with some commercial offerings on top.

Various Projects

- LangChain: LangChain helps developers build applications powered by LLMs through a standard interface for models, embeddings, vector stores and more.

- LangSmith: An observability, evaluation, and prompt engineering platform.

- Provider Integrations: Libraries that provide support for third-party LLMs, embedding models, chat models, tools, and toolkits. We will cover this in more detail in one of the subsequent posts.

Installation

To get started with the Python API, use pip to install langchain:

pip install langchain

Depending on the use case and the underlying provider, you might need additional packages, e.g. langchain-anthropic or langchain-openai. The agent example in this post also uses langgraph and langchain-anthropic.
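Before running the examples, it can help to confirm that the packages are importable. The following is a minimal sketch, not part of LangChain: the helper name missing_modules and the module list are assumptions for illustration, and the ANTHROPIC_API_KEY check assumes the Anthropic integration reads its key from that environment variable.

```python
import importlib.util
import os

# Import names of the packages used in this post (assumed list).
REQUIRED_MODULES = ["langchain", "langgraph", "langchain_anthropic"]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules(REQUIRED_MODULES)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are installed.")

# Assumption: the Anthropic integration reads the key from this variable.
if not os.environ.get("ANTHROPIC_API_KEY"):
    print("ANTHROPIC_API_KEY is not set.")
```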

Building your first Agent

Let us start by building our first agent. We will use LangGraph, a companion library to LangChain for building agents. It supports multiple agent architectures, which we will look at in subsequent posts.

For now, we will start with a prebuilt agent:

from langgraph.prebuilt import create_react_agent

What is create_react_agent?

A prebuilt helper that creates a ReAct-style agent. ReAct = Reason + Act.

- Reasoning → model decides what to do.

- Action → calls a tool.

- Observation → receives tool output.

The loop repeats until the model produces a final answer.
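To make the Reason, Act, Observe cycle concrete, here is a minimal sketch in plain Python. It is not how create_react_agent is implemented: scripted_model is a hypothetical stand-in for an LLM, and the tool_call dictionary shape is an assumption chosen for illustration.

```python
def get_weather(city: str) -> str:
    """Stand-in tool that returns a fixed answer."""
    return f"It's always sunny in {city}!"


def scripted_model(messages):
    """Hypothetical stand-in for an LLM: requests the tool once, then answers."""
    last = messages[-1]
    if last["role"] == "tool":
        # An observation is available, so produce the final answer.
        return {"role": "assistant", "content": f"Final answer: {last['content']}"}
    # Reasoning step: decide that a tool call is needed.
    return {
        "role": "assistant",
        "content": "",
        "tool_call": {"name": "get_weather", "args": {"city": "sf"}},
    }


def react_loop(model, tools, messages, max_steps=5):
    """Run reason -> act -> observe until the model stops requesting tools."""
    messages = list(messages)  # work on a copy of the caller's history
    for _ in range(max_steps):
        reply = model(messages)  # Reasoning
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:  # no action requested, so this is the final answer
            return messages
        result = tools[call["name"]](**call["args"])  # Action
        messages.append({"role": "tool", "content": result})  # Observation
    raise RuntimeError("no final answer within max_steps")


history = react_loop(
    scripted_model,
    {"get_weather": get_weather},
    [{"role": "user", "content": "what is the weather in sf"}],
)
for m in history:
    print(m["role"], ":", m["content"])
```

The max_steps guard is a design choice in this sketch so that a model which keeps requesting tools cannot loop forever.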

Create a function to get Weather

We will use a static function so that we can focus on the agent:

def get_weather(city: str) -> str:
    """Get weather for a given city."""
    return f"It's always sunny in {city}!"
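The function name, docstring, and type hints matter because a model needs a description of a tool to decide when and how to call it. The sketch below uses only the standard inspect module to show what can be read from a plain function. The describe_tool helper is hypothetical and is not the mechanism LangChain uses internally.

```python
import inspect


def get_weather(city: str) -> str:
    """Get weather for a given city."""
    return f"It's always sunny in {city}!"


def describe_tool(func):
    """Collect the name, description, and parameter types of a function."""
    signature = inspect.signature(func)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func),
        "parameters": {
            # Use the type's name when available, else its string form.
            name: getattr(param.annotation, "__name__", str(param.annotation))
            for name, param in signature.parameters.items()
        },
    }


print(describe_tool(get_weather))
```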

Instantiate the Agent

Pass the Anthropic Claude 3.7 Sonnet model, the get_weather function as a tool, and a simple prompt:

agent = create_react_agent(
    model="anthropic:claude-3-7-sonnet-latest",
    tools=[get_weather],
    prompt="You are a helpful assistant"
)

Invoke the Agent and extract the messages

We will call agent.invoke with the relevant parameters:

# Run the agent
output = agent.invoke(
    {"messages": [{"role": "user", "content": "what is the weather in sf"}]}
)
messages = output['messages']

- messages → a chat-style message history.

- role → “user” means this came from the end-user.

- content → the actual user’s query.
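Because the input is a chat-style history, a follow-up question is just a longer list of messages. The sketch below uses plain dictionaries only. The build_input helper and the "assistant" reply are hypothetical and written by hand for illustration, not taken from a real model.

```python
def build_input(history, user_text):
    """Return a new input dict with the user's message appended to the history."""
    return {"messages": [*history, {"role": "user", "content": user_text}]}


# First turn: an empty history plus the user's question.
first = build_input([], "what is the weather in sf")

# Follow-up turn: earlier messages, a hand-written assistant reply, and a new question.
history = first["messages"] + [
    {"role": "assistant", "content": "It's always sunny in sf!"}
]
follow_up = build_input(history, "and in nyc?")

print(len(follow_up["messages"]))
```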

We use a small utility loop to extract the content from the various message types:

for m in messages:
    type_name = type(m).__name__
    if type_name == 'HumanMessage':
        content = m.content
    elif type_name == 'AIMessage':
        # AIMessage content is either a plain string or a list of content blocks
        type_content = type(m.content).__name__
        if type_content == 'str':
            content = m.content
        else:
            # Take the text of the first content block
            content = m.content[0]['text']
    elif type_name == 'ToolMessage':
        content = m.content
    else:
        content = m.content
    print(type_name,": ",content)
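Taking the first block assumes that the first block always carries text, which may not hold if a model responds with a tool call and no accompanying text. A slightly more defensive variant is sketched below. The message_text helper is hypothetical, and the block shape (dictionaries with "type" and "text" keys) is an assumption based on the Anthropic-style content seen in this example.

```python
def message_text(content):
    """Return the text of message content given as a string or a list of blocks."""
    if isinstance(content, str):
        return content
    parts = []
    for block in content:
        if isinstance(block, str):
            parts.append(block)
        elif isinstance(block, dict) and block.get("type") == "text":
            parts.append(block.get("text", ""))
        # Other block types (for example tool calls) carry no display text.
    return " ".join(parts)


# Hand-written sample content for illustration, not real model output.
sample = [
    {"type": "text", "text": "Let me check."},
    {"type": "tool_use", "id": "x", "name": "get_weather", "input": {"city": "sf"}},
]
print(message_text(sample))
```

With this helper, the body of the loop could be reduced to printing type(m).__name__ together with message_text(m.content).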

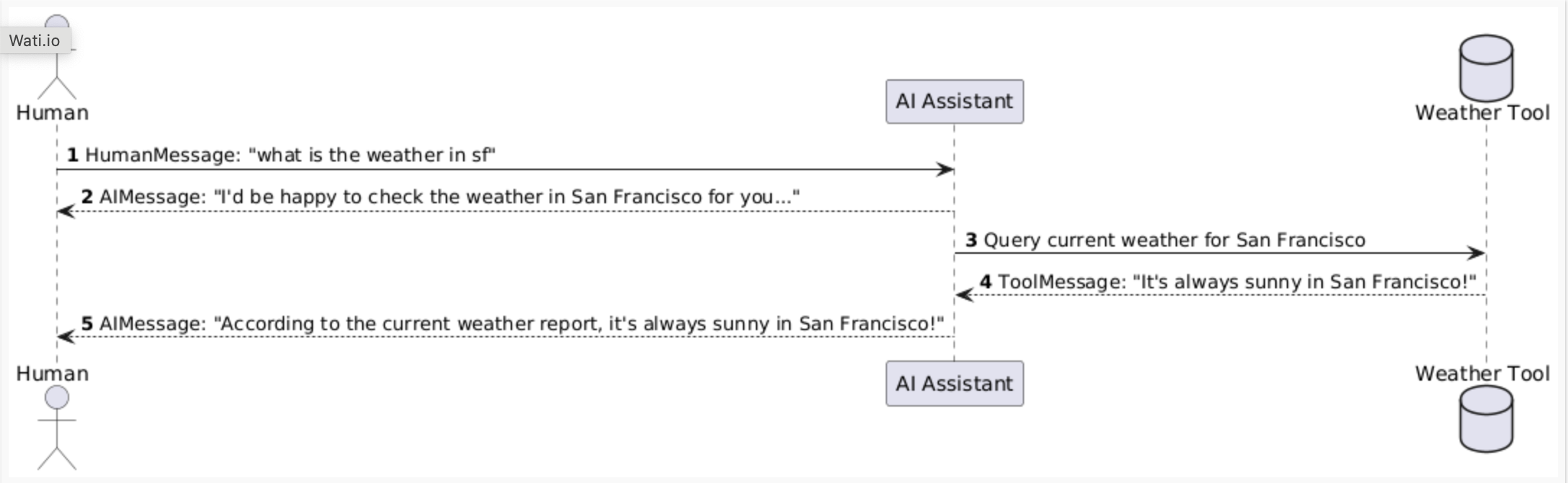

The output will be:

HumanMessage : what is the weather in sf

AIMessage : I'd be happy to check the weather in San Francisco for you. Let me get that information for you right now.

ToolMessage : It's always sunny in San Francisco!

AIMessage : According to the current weather report, it's always sunny in San Francisco! Enjoy the beautiful weather if you're there or planning to visit.

We generated a PlantUML diagram for the interaction.

Complete Source code link