Orchestrating Salesforce with LangGraph + OpenAI

Large Language Models shine at orchestration—deciding what needs to happen and when. In this post, we’ll turn a single English instruction like:

“Find the Account, open a high-priority Case, then schedule a follow-up Task for tomorrow.”

…into real (or mocked) Salesforce actions using LangGraph, LangChain OpenAI, and three tiny tools.

What you’ll build

- A Salesforce agent that:

  - queries Accounts with SOQL

  - creates a Case

  - creates a follow-up Task

- A graph-driven loop (assistant ↔ tools) that stops automatically when no more tool calls are needed

- A script that runs with or without a live Salesforce org (mock mode included)

Prerequisites

- Python 3.9+

- An OpenAI API key configured for langchain_openai (e.g., OPENAI_API_KEY)

- (Optional) Salesforce credentials to use a real org: USER_NAME, PASSWORD, SECURITY_TOKEN, and SF_DOMAIN=login (prod) or test (sandbox)

Install

pip install langgraph langchain-openai langchain-core simple-salesforce python-dotenv

If you won’t hit a real org, you can skip simple-salesforce. The script will auto-mock.

Architecture at a glance

flowchart LR
    START([START]) --> A[assistant node<br/>LLM + tools bound]
    A --> D{tool call?}
    D -->|yes| T[tools node<br/>executes selected tool]
    D -->|no| END([END])
    T --> A

- assistant node: Runs GPT-4o with tools bound; may emit tool calls.

- tools node: Executes whichever tool the model called.

- tools_condition: Routes between nodes; ends the run when no tool call is present.

Sequential calls are enforced (parallel_tool_calls=False) so writes happen in a safe order: SOQL → Case → Task.

The three tools (why these?)

1) SOQL Query — look up IDs before writes

# --- Tool 1: SOQL query
def sf_query_soql(query: str) -> List[Dict[str, Any]]:
    """
    Run a SOQL query. Returns a list of records (dicts).
    """
    if USE_REAL_SF and sf_client:
        res = sf_client.query(query)
        return res.get("records", [])
    # Mock result: only an Account lookup that mentions "Acme" returns a record
    if "FROM Account" in query and "Acme" in query:
        return [{"Id": "001xx000003AcmeAAA", "Name": "Acme Corp"}]
    return []

2) Create Case — tie to the Account

# --- Tool 2: Create Case
def sf_create_case(account_id: str, subject: str, priority: str = "Medium",
                   origin: str = "Web") -> Dict[str, Any]:
    """
    Create a Case tied to an Account.
    """
    if USE_REAL_SF and sf_client:
        res = sf_client.Case.create({
            "AccountId": account_id,
            "Subject": subject,
            "Priority": priority,
            "Origin": origin,
            "Status": "New"
        })
        # Return a consistent shape
        return {"success": res.get("success", False), "id": res.get("id")}
    # Mock
    return {"success": True, "id": "500xx00000MockCase"}
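The priority argument reaches Salesforce exactly as the model supplied it. A small pre-write check could normalise it first, roughly as sketched here. The `normalize_priority` helper and the list of allowed values are assumptions for illustration; picklist values are configurable per org, so confirm them against yours.

```python
# Hypothetical helper: the allowed values are assumed, not read from the org.
ASSUMED_CASE_PRIORITIES = ("High", "Medium", "Low")

def normalize_priority(value: str, allowed=ASSUMED_CASE_PRIORITIES,
                       default: str = "Medium") -> str:
    """Return the canonical spelling of a priority, or the default if unknown."""
    wanted = value.strip().lower()
    for option in allowed:
        # Match case-insensitively and also accept forms like "high-priority"
        if wanted == option.lower() or wanted.startswith(option.lower() + "-"):
            return option
    return default

print(normalize_priority("high"))           # High
print(normalize_priority("High-priority"))  # High
print(normalize_priority("urgent"))         # Medium (falls back to the default)
```

Falling back to a default keeps the write from failing on an unknown value; rejecting the call and asking the user may be the better choice for high-impact records.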

3) Create Task — tie to the Case (WhatId) with a default due date of tomorrow

# --- Tool 3: Create Task
def sf_create_task(what_id: str, subject: str, due_date_iso: Optional[str] = None,
                   status: str = "Not Started", priority: str = "Normal") -> Dict[str, Any]:
    """
    Create a Task (activity). 'what_id' can be Case Id, Opportunity Id, etc.
    """
    # Default the due date to tomorrow (computed in UTC)
    if due_date_iso is None:
        due_date_iso = (datetime.utcnow() + timedelta(days=1)).strftime("%Y-%m-%d")
    if USE_REAL_SF and sf_client:
        res = sf_client.Task.create({
            "WhatId": what_id,
            "Subject": subject,
            "ActivityDate": due_date_iso,
            "Status": status,
            "Priority": priority
        })
        return {"success": res.get("success", False), "id": res.get("id")}
    # Mock
    return {"success": True, "id": "00Txx00000MockTask", "due": due_date_iso}
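The default due date in the Task tool is computed from UTC, so “tomorrow” can land a day off for users far from UTC. A time-zone-aware variant might look like the sketch below. `tomorrow_iso` and its parameters are hypothetical names, and the fixed UTC offset stands in for whatever time zone your users actually work in.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

def tomorrow_iso(now: Optional[datetime] = None, tz: timezone = timezone.utc) -> str:
    """Return tomorrow's date (YYYY-MM-DD) as seen in the given time zone."""
    # Use an aware "now" so the conversion below is unambiguous
    current = now if now is not None else datetime.now(timezone.utc)
    local = current.astimezone(tz)
    return (local + timedelta(days=1)).strftime("%Y-%m-%d")

# 23:30 UTC on 1 March is already 2 March at UTC+5:30
late_evening = datetime(2025, 3, 1, 23, 30, tzinfo=timezone.utc)
ist = timezone(timedelta(hours=5, minutes=30))
print(tomorrow_iso(late_evening))       # 2025-03-02
print(tomorrow_iso(late_evening, ist))  # 2025-03-03
```

Passing `now` explicitly also makes the date logic easy to test without patching the clock.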

That’s enough to compose a multi-step business action from a single sentence.

LangChain model with tools

# --- LangChain model with tools

tools = [sf_query_soql, sf_create_case, sf_create_task]

llm = ChatOpenAI(model="gpt-4o")

# Sequential tool calls are safer for multi-step SF workflows

llm_with_tools = llm.bind_tools(tools, parallel_tool_calls=False)

What this does

You first declare the three callable “tools” the model is allowed to use: sf_query_soql (reads with SOQL), sf_create_case (writes a Case tied to an Account), and sf_create_task (writes a Task tied to a Case via WhatId). Then you construct a GPT-4o client through LangChain. The final line binds those tools to the model so the LLM can emit structured function calls with JSON arguments at the right moments in a conversation.

Why set parallel_tool_calls=False

Salesforce actions here are order-dependent: you must query the Account to get its Id, create the Case to get the Case Id, and only then create the Task pointing to that Case. Disallowing parallel calls limits the model to at most one tool call per turn, so each write is issued only after the result it depends on has come back. That keeps the model from guessing an Id it has not yet received and makes the workflow easier to audit.

How it plays out at runtime

The user asks in plain English; the model decides it needs an Account Id and calls sf_query_soql. With that Id it calls sf_create_case, receives a CaseId, and then calls sf_create_task using WhatId=CaseId (optionally setting a due date). When no more tools are needed, the model replies in natural language summarizing what it did and the relevant IDs.

When you might allow parallel calls

If you’re doing purely read-only fan-out (for example, several independent SOQL queries) or truly independent, idempotent writes with guardrails, parallel calls can speed things up. For most CRM write paths, keep them sequential.
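One way to get read-only fan-out while leaving the model's tool calls sequential is to batch several independent queries inside a single tool. The sketch below shows that idea with the standard library only; `mock_query` stands in for the SOQL tool in mock mode and `run_queries` is a hypothetical helper, not part of the original script.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Dict, List

def mock_query(query: str) -> List[Dict[str, Any]]:
    """Stand-in for sf_query_soql in mock mode (hypothetical data)."""
    if "FROM Account" in query and "Acme" in query:
        return [{"Id": "001xx000003AcmeAAA", "Name": "Acme Corp"}]
    return []

def run_queries(queries: List[str], max_workers: int = 4) -> List[List[Dict[str, Any]]]:
    """Run independent read-only queries concurrently; results keep input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(mock_query, queries))

results = run_queries([
    "SELECT Id, Name FROM Account WHERE Name LIKE 'Acme%'",
    "SELECT Id, Name FROM Account WHERE Name LIKE 'Globex%'",
])
print([len(r) for r in results])  # [1, 0]
```

Against a real org, a small `max_workers` value helps stay within API limits.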

Implementation notes

Keep tool inputs and outputs simple and consistent (for example, always return {"success": ..., "id": ...} for writes), and remind the model in your system prompt to query for IDs before creating records. This reduces ambiguity and helps the agent make correct, minimal calls.
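A decorator is one way to hold every write tool to the same result shape, including when the underlying call raises. This is a minimal sketch: `consistent_write_result` and the extra `error` key are assumptions, not part of the original script, and you should check that your tool-binding layer still sees the wrapped function's original signature.

```python
import functools
from typing import Any, Callable, Dict

def consistent_write_result(fn: Callable[..., Dict[str, Any]]) -> Callable[..., Dict[str, Any]]:
    """Wrap a write tool so it always returns {"success", "id", "error"}."""
    @functools.wraps(fn)  # keeps the name and docstring of the wrapped function
    def wrapper(*args: Any, **kwargs: Any) -> Dict[str, Any]:
        try:
            res = fn(*args, **kwargs)
            return {"success": bool(res.get("success")), "id": res.get("id"), "error": None}
        except Exception as exc:  # report the failure instead of raising
            return {"success": False, "id": None, "error": str(exc)}
    return wrapper

@consistent_write_result
def create_case_mock(account_id: str, subject: str) -> Dict[str, Any]:
    """Hypothetical mock write used only for this example."""
    if not account_id:
        raise ValueError("account_id is required")
    return {"success": True, "id": "500xx00000MockCase"}

print(create_case_mock("001xx000003AcmeAAA", "Login issue"))
print(create_case_mock("", "Login issue"))
```

Returning the error as data gives the model something to react to on its next turn, for example by asking the user for the missing Account.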

Agent control flow

System message, assistant node, and graph wiring

# --- System message (keeps the assistant focused)
sys_msg = SystemMessage(content=(
    "You are a Salesforce assistant. Given natural language tasks, decide when to:\n"
    "- Query Accounts/records via SOQL\n"
    "- Create a Case associated with an Account\n"
    "- Create a Task (follow-up) associated with a Case\n"
    "Ask for missing details if essential. Use tools to get IDs before creating records."
))

System message

SystemMessage defines the assistant’s role and the actions it should consider. It steers the model toward Salesforce tasks (SOQL queries, Case creation, Task creation) and instructs it to request missing inputs and to obtain record IDs via tools before attempting writes. This is guidance rather than enforcement: only the set of bound tools limits what the agent can actually do. The newline escapes (\n) lay the instructions out as bullet-like lines inside a single message.

Assistant node

assistant(state) runs one LLM step. It prepends the fixed sys_msg to the current conversation (state["messages"]) and invokes llm_with_tools. The returned AIMessage may include a structured tool call (e.g., calling the SOQL tool with JSON arguments) or a final natural-language response. This node does not execute tools; it only produces the next assistant message.

# --- Assistant node (unchanged structure)
def assistant(state: MessagesState):
    # Prepend the system message, run one LLM step, and return the reply to be added to state
    return {"messages": [llm_with_tools.invoke([sys_msg] + state["messages"])]}

Graph construction

StateGraph(MessagesState) declares a graph whose state is a list of messages. Two nodes are registered:

- "assistant": the function above that runs the LLM with tools enabled.

- "tools": a ToolNode that executes whichever tool the LLM requested.

# --- Build the graph

builder = StateGraph(MessagesState)

builder.add_node("assistant", assistant)

builder.add_node("tools", ToolNode(tools))

builder.add_edge(START, "assistant")

builder.add_conditional_edges("assistant", tools_condition) # routes to tools if the LLM called one

builder.add_edge("tools", "assistant")

react_graph = builder.compile()

Edges define execution order:

- START → "assistant" begins each run with an LLM step.

- A conditional edge from "assistant" uses tools_condition to inspect the latest message: if it contains a tool call, control routes to "tools"; otherwise, the run ends.

- "tools" → "assistant" feeds tool outputs back into the conversation, allowing the LLM to take another step with fresh results.

builder.compile() produces react_graph, a runnable artifact you can invoke with an initial {"messages": [...]} payload.
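To make the control flow concrete, here is a plain-Python stand-in for the assistant ↔ tools loop. It is not how LangGraph is implemented: the dict-based message shape, `route`, `run_loop`, and the scripted model are all assumptions chosen so the example runs with the standard library alone.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]

def route(messages: List[Message]) -> str:
    """Mimic the routing decision: go to tools only if the last message asks for one."""
    return "tools" if messages[-1].get("tool_calls") else "end"

def run_loop(model: Callable[[List[Message]], Message],
             tools: Dict[str, Callable[..., Any]],
             messages: List[Message], max_steps: int = 10) -> List[Message]:
    for _ in range(max_steps):  # guard against a model that never stops calling tools
        messages = messages + [model(messages)]   # assistant step
        if route(messages) == "end":
            break
        for call in messages[-1]["tool_calls"]:   # tools step
            result = tools[call["name"]](**call["args"])
            messages = messages + [{"role": "tool", "name": call["name"], "content": result}]
    return messages

def scripted_model(messages: List[Message]) -> Message:
    """Fake model: asks for one lookup, then answers once a tool result is present."""
    if any(m["role"] == "tool" for m in messages):
        return {"role": "assistant", "content": "Found the account."}
    return {"role": "assistant", "content": "",
            "tool_calls": [{"name": "lookup", "args": {"name": "Acme"}}]}

history = run_loop(scripted_model,
                   {"lookup": lambda name: {"Id": "001xx000003AcmeAAA", "Name": name}},
                   [{"role": "user", "content": "Find Acme"}])
print([m["role"] for m in history])  # ['user', 'assistant', 'tool', 'assistant']
```

The `max_steps` cap plays the role of a safety limit: without one, a model that keeps requesting tools would loop indefinitely.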

Execution flow (diagram)

flowchart TB

START([START]) --> A[assistant LLM+tools]

A --> D{tool call?}

D -->|no| END([END])

subgraph TOOLS[Tools executed in order]

direction LR

Q[sf_query_soql] --> C[sf_create_case] --> T[sf_create_task]

end

D -->|yes| Q

T --> A

The subgraph shows the order in which the model is expected to pick tools, not a fixed chain: each tool call is a separate pass through the tools node, and control returns to the assistant after every one.

Putting it in action

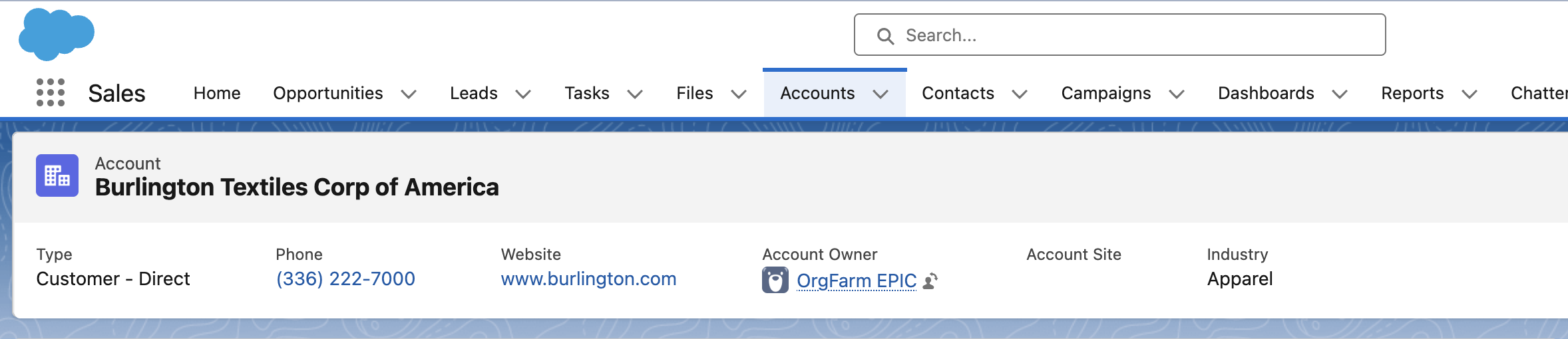

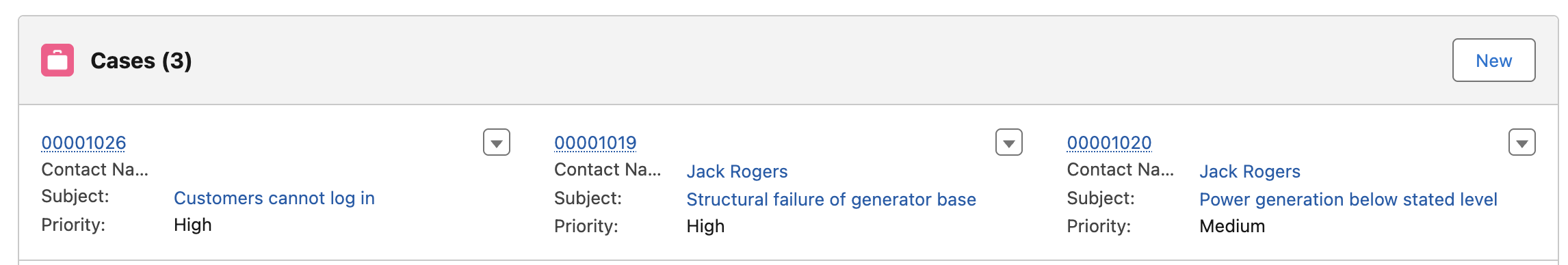

We will look up an Account named Burlington Textiles Corp of America, create a Case on it, and then create a follow-up Task on that Case.

# --- Run a demo

user_request = (

"Find the Account named 'Burlington Textiles Corp of America'. Create a High-priority Case titled "

"'Customers cannot log in'. Then create a follow-up Task for tomorrow titled "

"'Call customer with workaround' on that Case."

)

messages = [HumanMessage(content=user_request)]

result_state = react_graph.invoke({"messages": messages})

for m in result_state["messages"]:
    m.pretty_print()

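The loop prints every message from the run in order: the request, each tool call and its result, and the final summary.

To verify the result in a real org, open the Account in Salesforce and check that the new Case and its Task appear.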

Running the demo

1) Create a .env (the Salesforce variables are optional; they enable real Salesforce calls):

OPENAI_API_KEY=sk-...

USER_NAME=you@example.com

PASSWORD=yourSalesforcePassword

SECURITY_TOKEN=yourSecurityToken

SF_DOMAIN=login # or test

2) Run the script (full listing below):

python salesforce_agent.py

3) What you’ll see

- If creds are set and valid: "[SF] Connected to Salesforce org."

- Otherwise: "[SF] Env vars not set; using mock Salesforce."

- The agent will:

  - query the Account (SOQL)

  - create a Case

  - create a Task due tomorrow

- Then it will summarize what it did (IDs, subjects, due date).
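The switch between real and mock mode comes down to whether the Salesforce variables are present. The script's own check is not reproduced here; the sketch below is one plausible shape for it, with `choose_sf_mode` and `REQUIRED_SF_VARS` as hypothetical names.

```python
from typing import Mapping, Tuple

REQUIRED_SF_VARS = ("USER_NAME", "PASSWORD", "SECURITY_TOKEN")

def choose_sf_mode(env: Mapping[str, str]) -> Tuple[bool, str]:
    """Return (use_real_sf, domain) based on which variables are set and non-empty."""
    missing = [name for name in REQUIRED_SF_VARS if not env.get(name)]
    if missing:
        print("[SF] Env vars not set; using mock Salesforce.")
        return False, ""
    # SF_DOMAIN is optional; "login" targets production, "test" a sandbox
    return True, env.get("SF_DOMAIN", "login")

print(choose_sf_mode({}))
print(choose_sf_mode({"USER_NAME": "you@example.com", "PASSWORD": "x",
                      "SECURITY_TOKEN": "y", "SF_DOMAIN": "test"}))
```

In a script you would typically pass `os.environ` after loading the .env file; taking a mapping as the argument keeps the check testable.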

Full script (drop-in)

Save as salesforce_agent.py and run. It works in real mode if the env vars are present; otherwise it falls back to mock.

Complete Source Listing link

GitHub link

Production hardening (short list)

- Idempotency: store an external key (e.g., hash of AccountId+Subject+Date) on Case/Task to prevent duplicates on retries.

- Validation/Guardrails: add a “policy” tool to validate enums (Priority/Status) before writes.

- Approvals: insert a human-in-the-loop node for high-impact writes.

- Observability: log tool inputs/outputs and persist the graph run id on records.

- Auth: prefer OAuth JWT; don’t commit secrets; rotate tokens.

- Throughput: keep sequential writes; if you must fan-out, batch and back-off for API limits.
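For the idempotency item, the key can be derived deterministically from the fields named above. The sketch below is one possible scheme, with `idempotency_key` as a hypothetical helper; the normalisation rules and the 32-character truncation are arbitrary choices, and the custom field that would hold the key is something you would need to create in your org.

```python
import hashlib

def idempotency_key(account_id: str, subject: str, date_iso: str) -> str:
    """Derive a stable key from AccountId + Subject + Date."""
    # Normalise the subject so trivial whitespace/case differences map to one key
    normalized = "|".join([account_id, " ".join(subject.split()).lower(), date_iso])
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:32]

key_a = idempotency_key("001xx000003AcmeAAA", "Customers cannot log in", "2025-03-02")
key_b = idempotency_key("001xx000003AcmeAAA", "customers  cannot log in", "2025-03-02")
print(key_a == key_b)  # True
print(len(key_a))      # 32
```

On a retry, the tool can look the key up (or upsert against it) before creating a record, so a repeated request maps to the existing Case or Task instead of a duplicate.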

Troubleshooting

- “Env vars not set; using mock Salesforce.”: add USER_NAME, PASSWORD, SECURITY_TOKEN, and optionally SF_DOMAIN to .env.

- ModuleNotFoundError: simple_salesforce: run pip install simple-salesforce.

- Sandbox login fails: use SF_DOMAIN=test.

- No Account found: constrain SOQL generation via the prompt (e.g., exact Name) or adjust the mock to your test data.
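Besides prompting, another way to get exact-name lookups is to build the query in code from just the account name, escaping the value as a SOQL string literal. The two helpers below are hypothetical and not part of the original script; they assume an exact match on Name is what you want.

```python
def soql_string_literal(value: str) -> str:
    """Quote a value for use inside a SOQL WHERE clause."""
    # Escape backslashes first, then single quotes
    escaped = value.replace("\\", "\\\\").replace("'", "\\'")
    return "'" + escaped + "'"

def account_by_exact_name(name: str) -> str:
    """Build an exact-match Account lookup (hypothetical helper)."""
    return ("SELECT Id, Name FROM Account WHERE Name = "
            + soql_string_literal(name) + " LIMIT 1")

print(account_by_exact_name("Burlington Textiles Corp of America"))
print(account_by_exact_name("O'Brien Ltd"))
```

Exposing a narrow tool like this alongside (or instead of) the free-form SOQL tool leaves the model less room to generate a query that silently matches nothing.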

Next steps

- Add Case Comments and File attachments as tools.

- Insert an approval node before creating high-priority cases.

- Swap in Salesforce Data Cloud tools for DLO/DLA queries.

- Emit structured run logs to your ops telemetry or a Command Center UI.
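For the structured-logging idea, each tool could be wrapped so that every call emits one JSON line. This is a sketch under assumptions: `logged_tool`, the record fields, and the mock tool are illustrative, and the wrapper accepts keyword arguments only.

```python
import functools
import io
import json
import sys
import time
from typing import Any, Callable

def logged_tool(fn: Callable[..., Any], stream=None) -> Callable[..., Any]:
    """Wrap a tool so each call emits one JSON line with inputs, output, and duration."""
    @functools.wraps(fn)
    def wrapper(**kwargs: Any) -> Any:
        out = stream if stream is not None else sys.stderr
        started = time.perf_counter()
        result = fn(**kwargs)
        record = {"tool": fn.__name__, "input": kwargs, "output": result,
                  "ms": round((time.perf_counter() - started) * 1000, 2)}
        out.write(json.dumps(record, default=str) + "\n")
        return result
    return wrapper

def create_task_mock(what_id: str, subject: str) -> dict:
    """Hypothetical mock write used only for this example."""
    return {"success": True, "id": "00Txx00000MockTask"}

buffer = io.StringIO()
logged = logged_tool(create_task_mock, stream=buffer)
logged(what_id="500xx00000MockCase", subject="Call customer with workaround")
entry = json.loads(buffer.getvalue())
print(entry["tool"], entry["output"]["id"])  # create_task_mock 00Txx00000MockTask
```

One JSON object per line is easy to ship to most log pipelines; redact sensitive fields before logging inputs from a real org.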